I have successfully defended my Ph.D. thesis and joined Amazon Lab126 as an Applied Scientist, where I continue to work on challenging problems in 3D computer vision and deep learning for real-world applications. I carried out my Ph.D. research in the Department of Electrical and Computer Engineering at the University of Rochester, working with Professor Gaurav Sharma. I received the M.S. degree in electrical and computer engineering from the University of Rochester in 2017, and the B.S. degree in electrical engineering from the Harbin Institute of Technology in 2015.

My research interests span computer vision and machine learning, with applications to medical imaging, robotics, AR/VR, and digital heritage. I am particularly interested in 3D range data processing, multi-view geometry, 2D/3D registration, annotation-efficient deep learning, and semantic segmentation.

I have enjoyed working as a research intern at Facebook Reality Lab, Mitsubishi Electric Research Laboratories (MERL), and AIG.

CV | Google Scholar | GitHub | LinkedIn | Email

Projects

Deep Retinal Vessel Segmentation For Ultra-Widefield Fundus Photography [project page]

We propose an annotation-efficient method for vessel segmentation in ultra-widefield (UWF) fundus photography (FP) that does not require de novo labeled ground truth. Our method utilizes concurrently captured UWF fluorescein angiography (FA) images and iterates between a multi-modal registration and a weakly-supervised learning step. We construct a new dataset to facilitate further work on this problem.

Deep Retinal Vessel Segmentation For Fluorescein Angiography [project page]

We propose a novel deep learning pipeline for detecting retinal vessels in fluorescein angiography, a modality that has received limited attention in prior work. The pipeline reduces the effort required to generate labeled ground truth data. We release a new dataset to facilitate further research on this problem.

DeepMapping: Unsupervised Map Estimation From Multiple Point Clouds [project page]

We propose a novel registration framework that uses deep neural networks (DNNs) as auxiliary functions to align multiple point clouds from scratch to a globally consistent frame. We use DNNs to model the highly non-convex mapping process that traditionally involves hand-crafted data association, sensor pose initialization, and global refinement. Our key novelty is that properly defining unsupervised losses to “train” these DNNs through back-propagation is equivalent to solving the underlying registration problem, while depending less on the good initialization that ICP requires.

Fusing SfM and Lidar for Dense Accurate Depth Map Estimation [project page]

We present a novel framework for estimating accurate dense depth maps by combining 3D lidar scans with a set of uncalibrated RGB camera images of the same scene. The approach fuses structure from motion and lidar to precisely recover the transformation from 3D lidar space to the 2D image plane. The resulting 3D-to-2D mapping is then used to estimate a dense depth map for each image. The framework does not require the relative position of the lidar and camera to be fixed, so the sensors can conveniently be deployed independently for data acquisition.

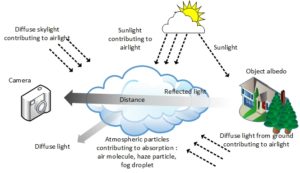

HazeRD: An Outdoor Scene Dataset and Benchmark for Single Image Dehazing [project page]

We provide a new dataset, HazeRD (Haze Realistic Dataset), for benchmarking dehazing algorithms under realistic haze conditions. HazeRD contains ten real outdoor scenes, each simulated under five different weather conditions. All images are of high resolution, typically six to eight megapixels.

Local-Linear-Fitting-Based Matting for Joint Hole Filling and Depth Upsampling of RGB-D Images [project page]

We propose an approach for jointly filling holes and upsampling depth information for RGB-D images, where RGB color information is available at all pixel locations whereas depth information is only available at lower resolution and entirely missing in small regions referred to as “holes”.

Point Cloud Analytics and Application: Architectural Biometrics [project page]

Architectural Biometrics is a platform that addresses the lack of tools for comparative analysis of spatial data. The platform is inspired by research on the Canadian and Ottoman railways, both of which include an array of prefabricated building designs that display fascinating dissimilarities. It allows users to compare similar objects and to develop a better understanding of human agency in the analyses.