Henry Lin

Advisor

Adam Purtee

Committee Members

Hangfeng He, Dan Gildea

Abstract

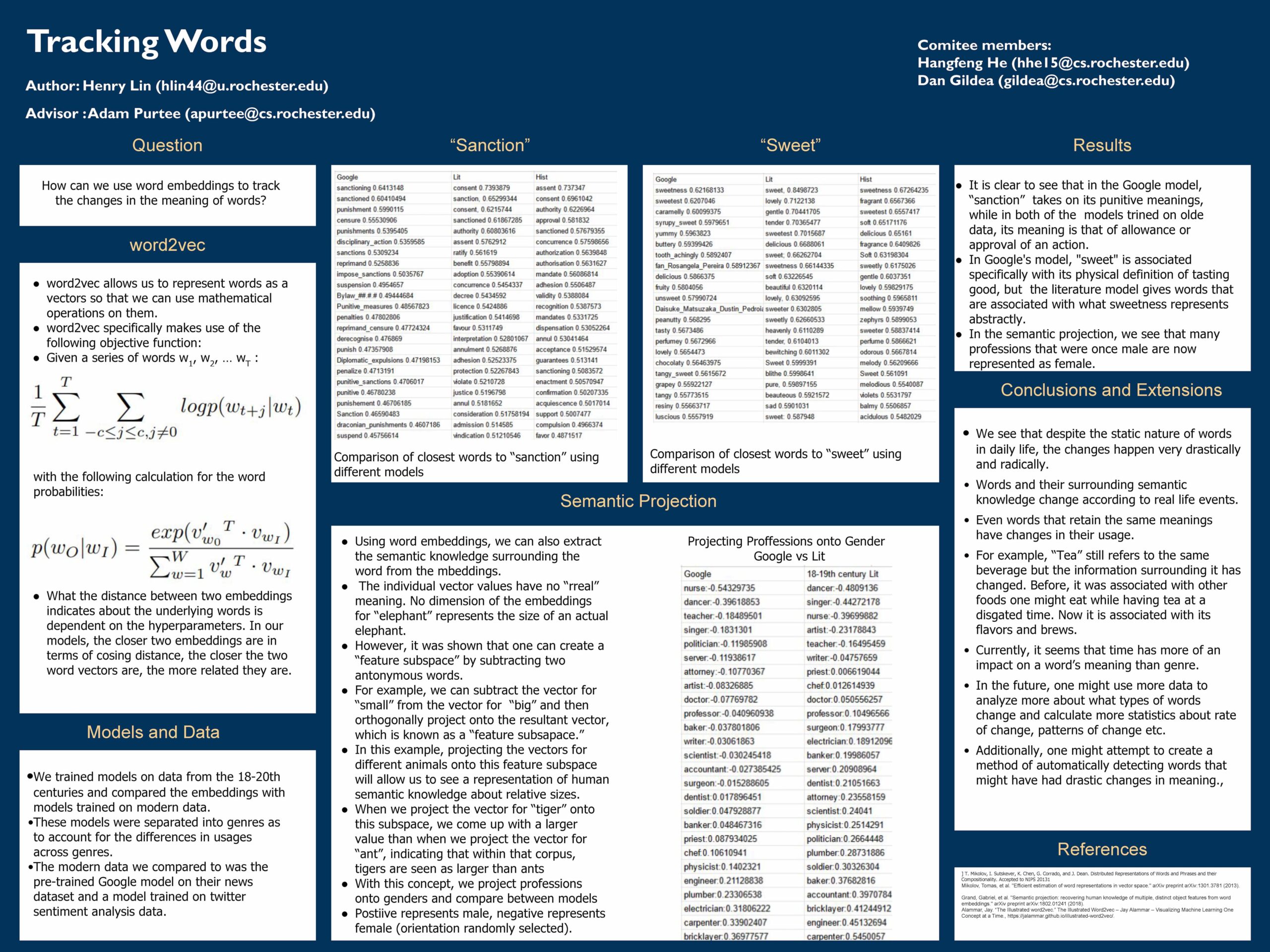

In this report we present the findings of a project that studies how word meanings change over time. Computational language models typically focus on modelling the fluency and grammaticality of language; they do not model how words change their meanings. The grammar of a language such as English changes neither as fast nor as much as its word meanings, so mixing data from different time periods does not pose a problem for building conventional language models. Word meanings, however, are much less stable than grammar, and studying their changes gives insight into not only how words change but also the factors that engender these changes. This project studies these changes by building word embedding models and analyzing the resulting embeddings with methods such as semantic projection. Our findings show that words not only change meaning, but do so faster and more drastically than commonly perceived. We also find that even when a word's denotational meaning changes little, its associations with other words still change greatly over time.