Team Members

Forest Davis-Hollander

Bright Ine

Supervisors

Jack Mottley, Daniel Phinney

Description

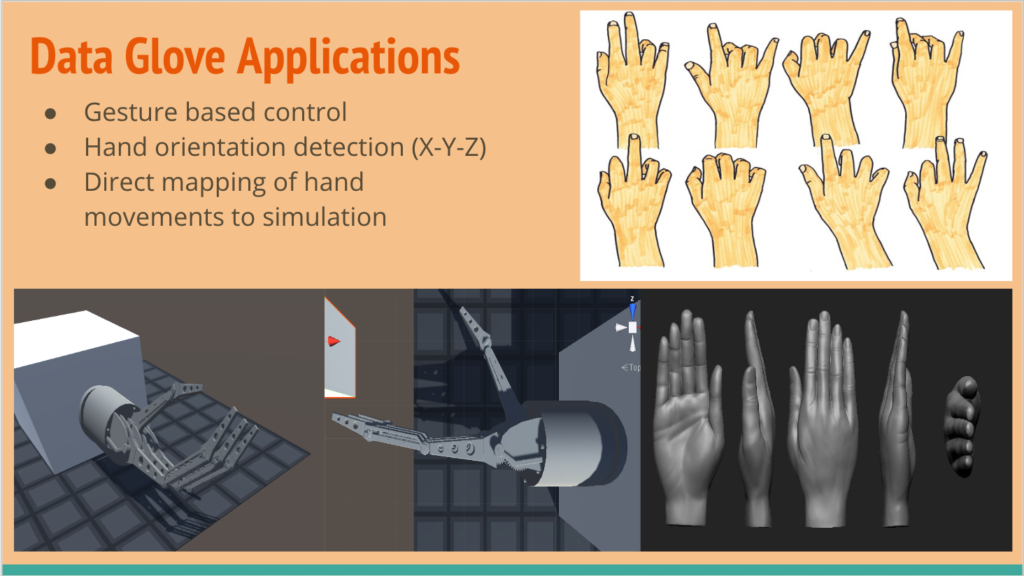

Our project is a “Data Glove” worn by a user and composed of several types of sensors for mapping human hand motion. The glove is intended primarily for virtual reality applications, and we use a virtual environment to show how well it captures natural motion. We built the simulation in the Unity game engine and feed data from the glove to a simulated robotic hand. Ideally, this happens in real time so that movements are captured live.

Original Project Design

Our goal was to build a data glove capable of capturing human hand movements and using them to control a simulated robotic hand in Gazebo ROS. This project would ultimately be used for more accurate video gaming, virtual reality, or real-life applications such as cleaning up toxic waste, remote surgery, or bomb disposal. Our idea for the glove was that it could gather input from a variety of sources, including a gyroscopic sensor, force sensors, flex sensors, and an RGB camera for motion confirmation. A microcontroller would process the data before sending it to a central computer, which would run and display the simulation. The glove would also give haptic feedback to the user’s fingertips based on the gripping force in the simulation.

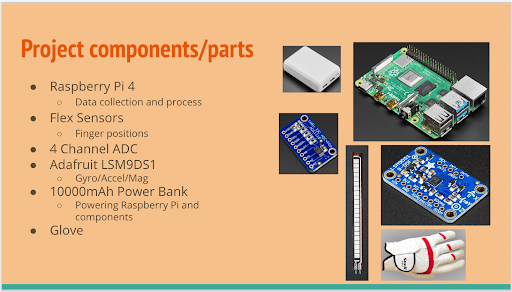

Revised Project Design

Our revised design uses a Raspberry Pi 4 running Python scripts to read input from an analog-to-digital converter (ADC). The ADC, in turn, receives the analog output of our flex sensors by measuring the voltage across a voltage divider composed of the flex sensor and resistors. Our design for the sensor layout was to use two flex sensors for each finger. One sensor would track the relative motion between the back of the hand and the first joint of the finger. The second sensor would span the distance from the first joint all the way to the end of the finger, as shown in Figure 1. Together, the two sensors would approximate the movement of each of the two tendons in a human finger.

In addition, we wanted to map the motion of the wrist and arm in general. For this purpose, we used a combined accelerometer/gyroscope/magnetometer sensing unit. Our initial plan was to use the accelerometer to track the motion of the glove in space and the gyroscope to track the orientation of the hand, and possibly to use the magnetometer to read thumb motion by placing a magnet on the end of the thumb. Additional Python code communicated with both the ADC and the multi-sensor unit over I2C.
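As a rough illustration of the voltage-divider reading described above, the Python sketch below converts a raw ADC count into a flex-sensor resistance and then into a normalized bend value. The supply voltage, ADC resolution, fixed resistor value, wiring order (flex sensor on the supply side, fixed resistor to ground), and the flat and bent calibration resistances are all assumptions chosen for illustration, not values taken from our build.

```python
# Illustrative constants only; none of these values come from the actual glove.
V_SUPPLY = 3.3       # assumed divider supply and ADC reference voltage (volts)
ADC_MAX = 4095       # assumed full-scale count of a 12-bit ADC
R_FIXED = 10_000.0   # assumed fixed divider resistor (ohms)
R_FLAT = 25_000.0    # assumed flex sensor resistance when straight (ohms)
R_BENT = 100_000.0   # assumed flex sensor resistance when fully bent (ohms)


def adc_to_voltage(count, adc_max=ADC_MAX, v_ref=V_SUPPLY):
    """Convert a raw ADC count to the voltage at the divider midpoint."""
    return v_ref * count / adc_max


def flex_resistance(v_out, r_fixed=R_FIXED, v_supply=V_SUPPLY):
    """Solve the divider equation for the flex sensor resistance.

    Assumed wiring: V_SUPPLY -> flex sensor -> v_out -> r_fixed -> GND, so
    v_out = v_supply * r_fixed / (r_flex + r_fixed).
    """
    if v_out <= 0:
        raise ValueError("v_out must be positive to solve for resistance")
    return r_fixed * (v_supply - v_out) / v_out


def bend_fraction(r_flex, r_flat=R_FLAT, r_bent=R_BENT):
    """Map resistance linearly to 0.0 (straight) .. 1.0 (fully bent), clamped."""
    fraction = (r_flex - r_flat) / (r_bent - r_flat)
    return max(0.0, min(1.0, fraction))


if __name__ == "__main__":
    raw_count = 900  # example reading, not measured data
    volts = adc_to_voltage(raw_count)
    ohms = flex_resistance(volts)
    print(f"{volts:.3f} V, {ohms:.0f} ohm, bend {bend_fraction(ohms):.2f}")
```

In practice the flat and bent resistances would need to be calibrated per sensor, since flex sensors vary from part to part and the response is not perfectly linear.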

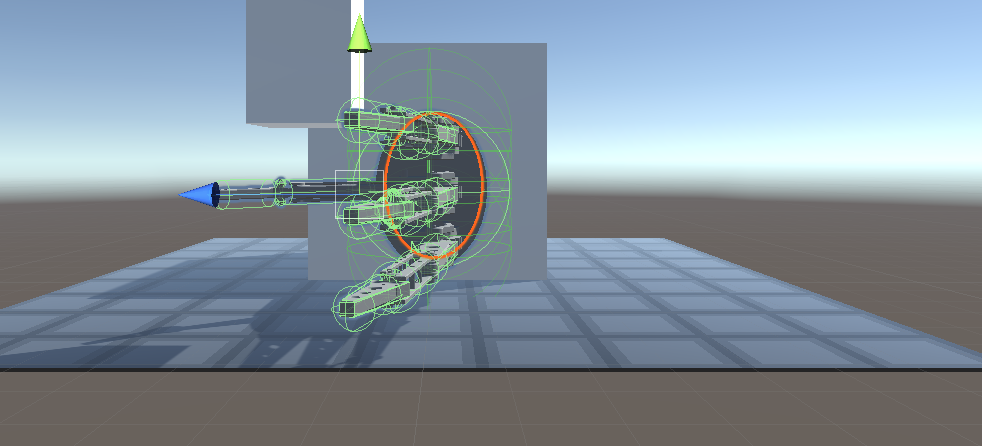

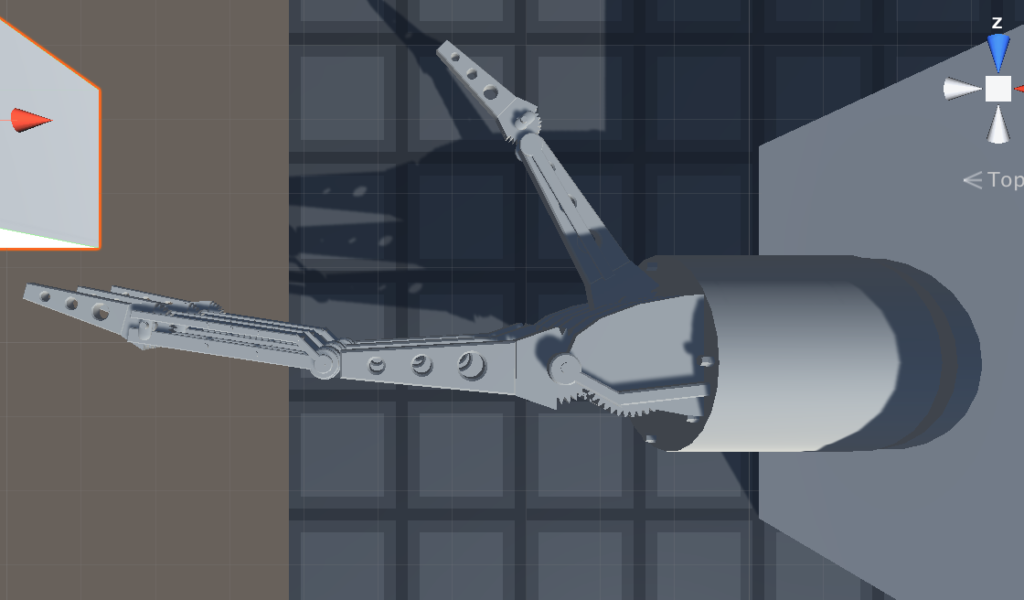

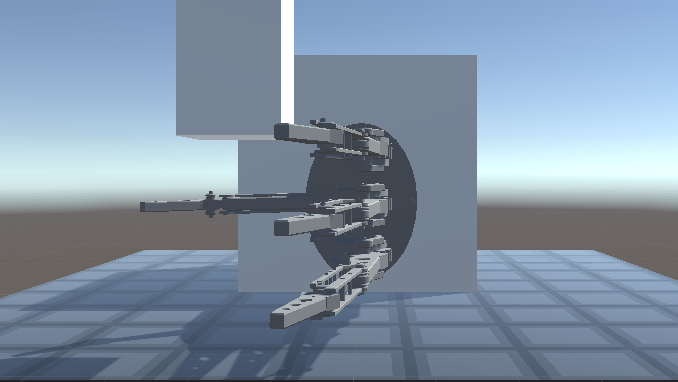

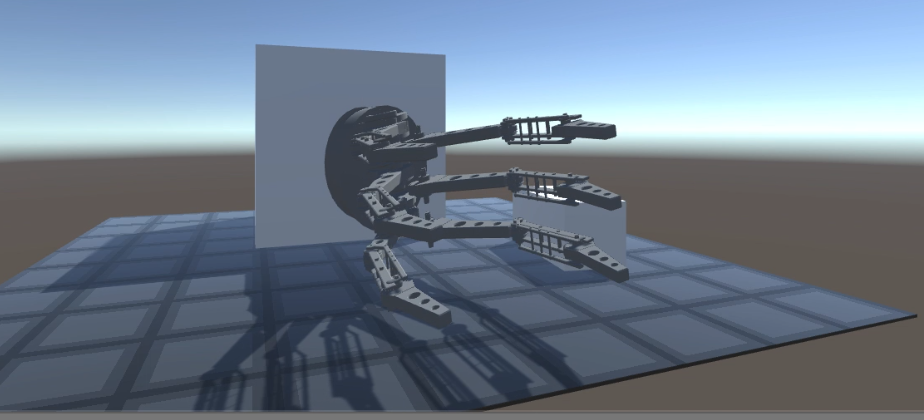

The software side of our project consisted of creating a robotic hand model within the Unity game engine. This involved taking apart existing open-source projects and repurposing their parts to build a new model. We built two designs: one resembling a human hand but with only three fingers, and another resembling a robotic gripper with three fingers facing each other. Both models were designed to take input from either a keyboard or another external source to manipulate the upper and lower fingers, as well as the thumb and wrist. To make the hand more realistic, we added motion constraints that match the general constraints of the human hand and simulate a real robotic hand as closely as possible, along with force colliders that accurately show when the fingers grip an object. The basic task of the simulation is to receive input either from a text file of sensor data generated by the Raspberry Pi or live via a TCP server. In this manner, the glove could be used to manipulate the hand and grip a block in the simulated environment.
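The data path from the glove to the simulation can be sketched on the Raspberry Pi side as follows. This is a minimal sketch under stated assumptions, not our actual protocol: the joint names, angle limits, comma-separated line format, host, and port are all hypothetical, and it assumes the simulation side listens for a TCP connection that the Pi opens. The same encoded lines could equally be appended to a text file for offline playback.

```python
import socket

# Hypothetical joint order and limits in degrees; the real model's
# constraints are defined in Unity and may differ.
JOINT_ORDER = ("upper_finger", "lower_finger", "thumb", "wrist")
JOINT_LIMITS_DEG = {
    "upper_finger": (0.0, 90.0),
    "lower_finger": (0.0, 110.0),
    "thumb": (0.0, 60.0),
    "wrist": (-45.0, 45.0),
}


def clamp_angle(joint, angle_deg):
    """Restrict an angle to the allowed range for the given joint."""
    low, high = JOINT_LIMITS_DEG[joint]
    return max(low, min(high, angle_deg))


def bend_to_angle(joint, bend):
    """Map a normalized bend value (0.0 .. 1.0) linearly onto the joint range."""
    low, high = JOINT_LIMITS_DEG[joint]
    bend = max(0.0, min(1.0, bend))
    return low + bend * (high - low)


def encode_frame(angles):
    """Serialize one frame as a newline-terminated, comma-separated ASCII line.

    Angles are clamped to the joint limits and written in JOINT_ORDER.
    """
    ordered = [clamp_angle(joint, angles[joint]) for joint in JOINT_ORDER]
    return (",".join(f"{a:.1f}" for a in ordered) + "\n").encode("ascii")


def send_frames(frames, host="127.0.0.1", port=5005):
    """Send each frame over one TCP connection (host and port are placeholders)."""
    with socket.create_connection((host, port)) as conn:
        for angles in frames:
            conn.sendall(encode_frame(angles))
```

Clamping before sending keeps out-of-range sensor readings from driving the model past its limits even if the receiving side applies its own constraints.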

Simulation Demonstration

Photo Gallery