Team Members

Paula Sedlacek, Audio and Music Engineering ’24

Qixin Deng, Audio and Music Engineering ’24

Jay Peskin, Audio and Music Engineering ’24

Tianyu Qi, Audio and Music Engineering ’24

Mentors

Michael Heilemann, Tre DiPassio, Dan Phinney, Sarah Smith

Project Description

Electric wheelchairs require users to operate a joystick, which excludes individuals with compromised motor skills. A compact and inexpensive voice-controlled device that interfaces with the joystick of an electric wheelchair can assist users with limited mobility. This project aims to design an attachment for powered wheelchairs that controls the chair’s joystick in response to the user’s voice commands. The attachment is meant to be a relatively inexpensive add-on unit for an already functioning powered wheelchair, and it should fit a variety of available chairs. The project requires three parts: a system for capturing the user’s voice, audio processing to interpret the user’s commands, and a mechanism for controlling the joystick based on what the user said. The device must be compact and inexpensive without compromising functionality or safety.

Design

Block Diagram

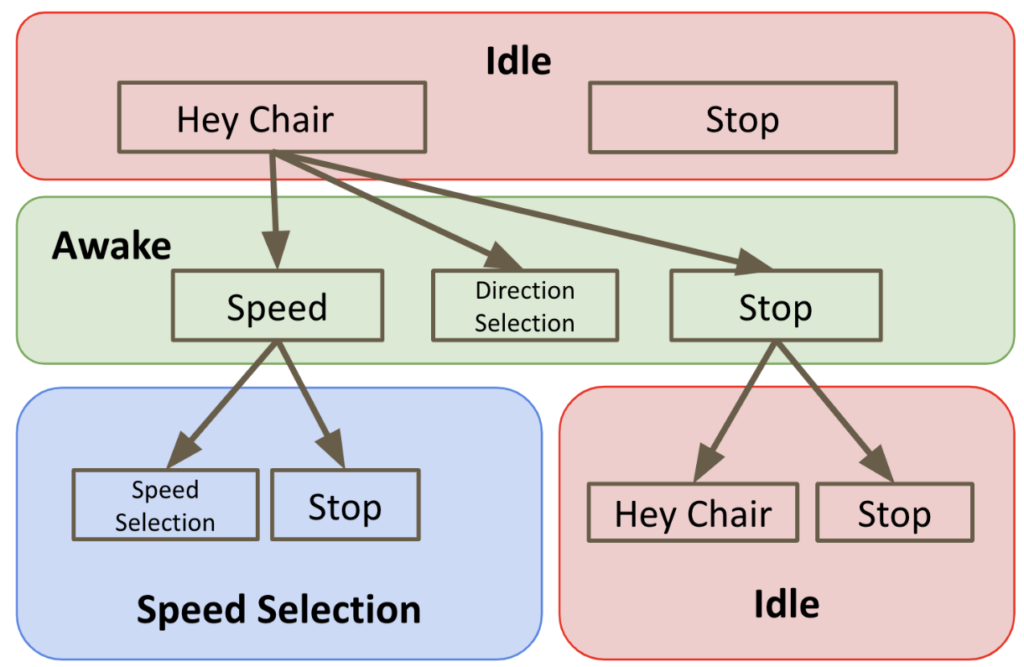

The block diagram illustrates the control logic behind WheelTalk’s speech recognition.

Starting at the top left, the diagram shows that the “stop” function of the WheelTalk module is completely independent of all other functions. No matter what is happening, if the user gives the “stop” command then the chair will stop immediately.

The red diamond represents IDLE mode. In this state, the module listens only for “stop” and the wake word, “hey chair”. Once the wake word is given, the module moves on to the green diamond: AWAKE mode.

From here, the user can move the chair at its default speed by giving one of the direction commands: “forward”, “back”, “left”, or “right”. Each command moves the chair’s joystick in the corresponding direction. The user can also change the chair’s speed to 1, 2, or 3 by saying “speed”, waiting for the blue LED to light up, and then saying the desired speed. The speed setting determines how far the joystick is pushed in the given direction.
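To make the effect of the speed setting concrete, here is a minimal Python sketch that scales a unit direction vector by the selected speed level. The deflection fractions (one third, two thirds, and full travel) and the `joystick_target` helper are hypothetical; the actual joystick travel per speed level is not given here.

```python
# Hypothetical fraction of full joystick travel for each speed level;
# the real deflection amounts used by WheelTalk are not specified.
SPEED_FRACTION = {1: 1 / 3, 2: 2 / 3, 3: 1.0}

# Unit direction vectors: x points right, y points forward.
DIRECTIONS = {
    "forward": (0.0, 1.0),
    "back": (0.0, -1.0),
    "left": (-1.0, 0.0),
    "right": (1.0, 0.0),
}


def joystick_target(direction: str, speed: int) -> tuple[float, float]:
    """Return the (x, y) joystick deflection as a fraction of full travel."""
    if speed not in SPEED_FRACTION:
        raise ValueError(f"unsupported speed level: {speed}")
    dx, dy = DIRECTIONS[direction]
    scale = SPEED_FRACTION[speed]
    return (dx * scale, dy * scale)
```

For example, `joystick_target("forward", 3)` asks for full forward travel, while speed 1 pushes the joystick only a third of the way.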

In addition, every command except “stop” resets a timer. If no new command is given within ten seconds of the previous one, the timer reaches its maximum and the module reverts to IDLE mode.
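The logic in the block diagram can be modelled as a small state machine. The Python sketch below is a hypothetical simulation, not the WheelTalk firmware: `WheelTalkModel`, its mode names, the default speed of 1, and the choice to return to AWAKE after a speed is selected are all assumptions. The timeout is a parameter (`timeout_s`), set here to the ten seconds stated above.

```python
from dataclasses import dataclass
from typing import Optional

DIRECTIONS = {"forward", "back", "left", "right"}
SPEED_WORDS = {"one": 1, "two": 2, "three": 3}


@dataclass
class WheelTalkModel:
    """Hypothetical model of the block-diagram logic (not the firmware)."""
    timeout_s: float = 10.0
    mode: str = "IDLE"            # "IDLE", "AWAKE", or "SPEED_SELECT"
    speed: int = 1                # assumed default speed level
    moving: Optional[str] = None  # current direction, None when stopped
    last_command_t: float = 0.0

    def tick(self, now: float) -> None:
        # Revert to IDLE once the timer reaches its maximum.
        # Assumption: timing out does not by itself move the joystick.
        if self.mode != "IDLE" and now - self.last_command_t >= self.timeout_s:
            self.mode = "IDLE"

    def hear(self, word: str, now: float) -> None:
        self.tick(now)
        if word == "stop":
            # Checked first and in every mode: no wake word is needed,
            # and "stop" does not reset the timer.
            self.moving = None
            return
        if self.mode == "IDLE":
            if word == "hey chair":
                self.mode = "AWAKE"
                self.last_command_t = now
            return  # everything else is ignored while IDLE
        if self.mode == "AWAKE":
            if word in DIRECTIONS:
                self.moving = word
            elif word == "speed":
                self.mode = "SPEED_SELECT"  # the blue LED would light here
            else:
                return  # unrecognized words do not reset the timer
        elif self.mode == "SPEED_SELECT":
            if word not in SPEED_WORDS:
                return
            self.speed = SPEED_WORDS[word]
            self.mode = "AWAKE"  # assumption: back to AWAKE after choosing
        self.last_command_t = now
```

Checking “stop” before anything else mirrors the diagram’s independent stop path: it is honoured even in IDLE mode and never refreshes the timer.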

Hardware

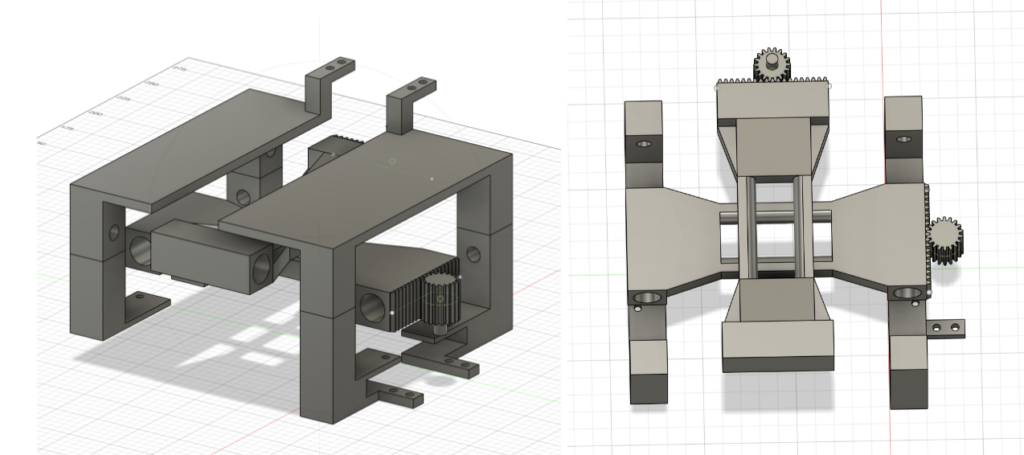

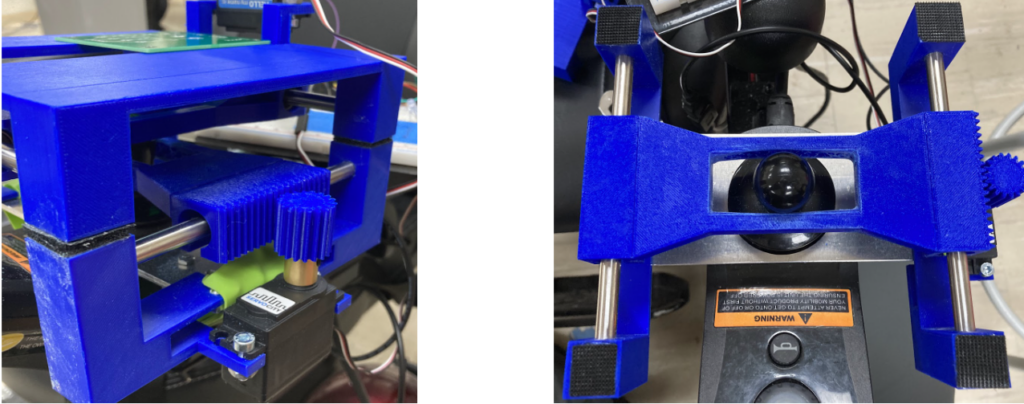

Joystick Controller

The joystick controller has five main components: the brackets, rack and pinion gears, linear bearings, servo motors, and the outer cage. Most joystick controller components were designed using the CAD software Fusion 360 and printed using a Prusa i3 3D printer.
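Assuming each servo turns a pinion that drives a rack directly (the exact arrangement and gear dimensions are not given here), the rack travel $x$ follows the standard rack-and-pinion relation, where $r$ is the pinion’s pitch radius, $m$ its module, $z$ its tooth count, and $\theta$ the servo rotation in radians. With a hypothetical 10 mm pitch radius and a 30° servo rotation:

$$
x = r\,\theta, \qquad r = \frac{m z}{2}
$$

$$
x = 10\ \text{mm} \times \frac{\pi}{6} \approx 5.24\ \text{mm}
$$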

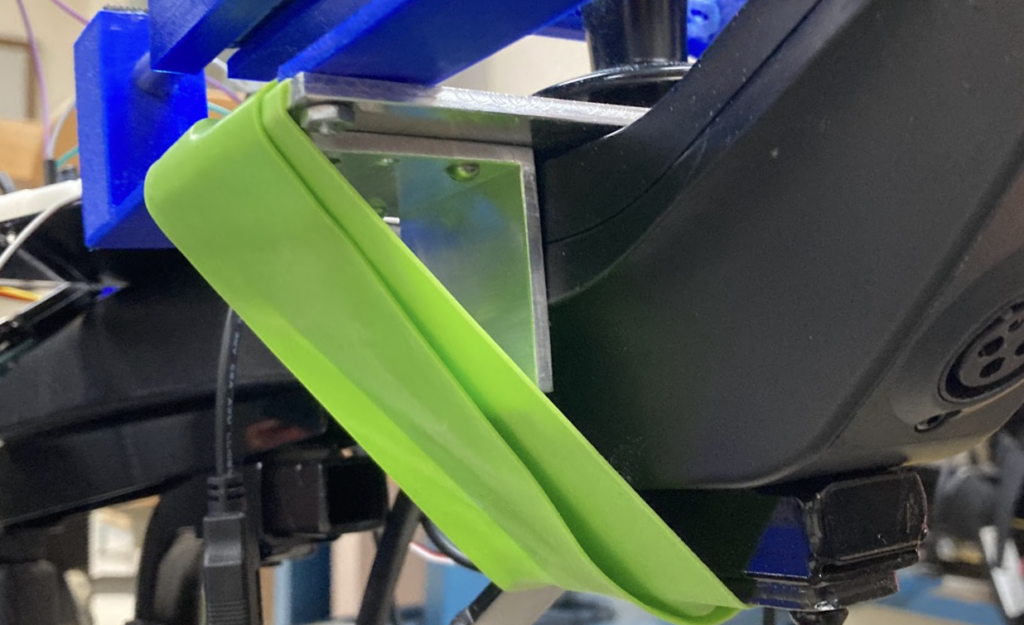

Mount

The mount, made predominantly of metal, secures the joystick controller onto the wheelchair’s joystick. It sits atop the arm of the wheelchair, with the joystick protruding through the center of the mount, and the joystick controller is screwed onto the mount’s surface. An elastic band attached to the mount wraps around the wheelchair arm, keeping the mount secure along multiple axes.

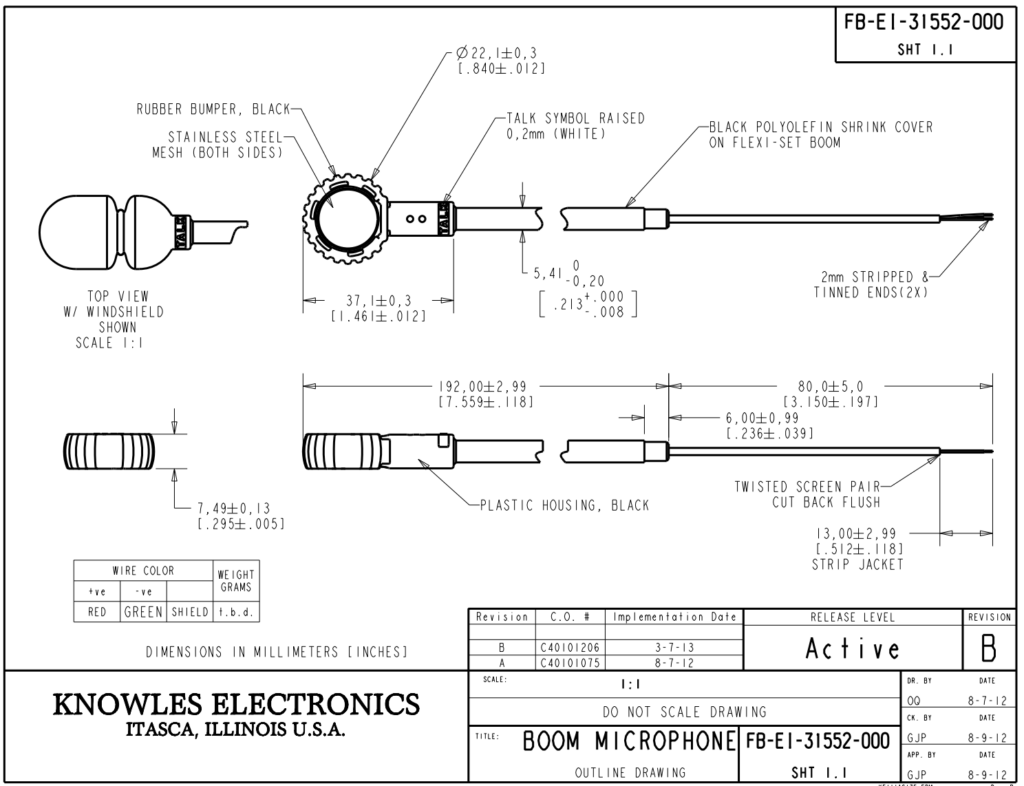

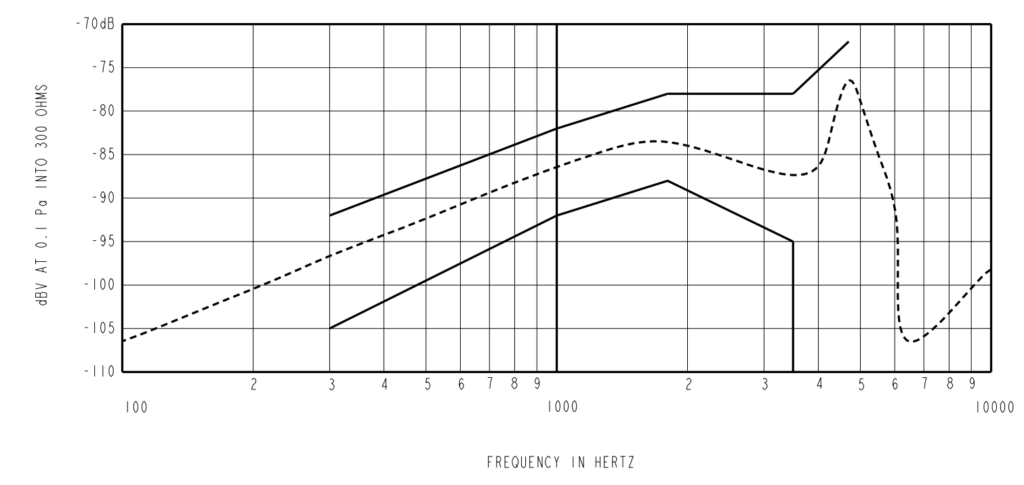

Microphone

The Knowles FB-EI-31552-000 boom microphone was selected as the audio input. It is an electro-mechanical microphone that does not require biasing, and it only picks up sound sources in close proximity to it. Its specifications are listed below:

- Type: Electro-mechanical

- Polar Pattern: Bi-directional

- Impedance: 300 Ohm

- Frequency Response Range: 300 Hz to 5 kHz

- Sensitivity @ 1 kHz: -67 dBV

- Humidity Tolerance: 50%

- Connection: 2 Wire
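Microphone sensitivity in dBV is conventionally referenced to 1 V of output for a 1 Pa (94 dB SPL) tone. Assuming that reference applies to the -67 dBV figure above, the output per pascal is:

$$
V_{\text{out}} = 10^{-67/20}\ \text{V/Pa} \approx 0.447\ \text{mV/Pa}
$$

Since the output scales linearly with pressure, a 0.1 Pa (about 74 dB SPL) sound at the capsule produces roughly 45 µV.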

Software

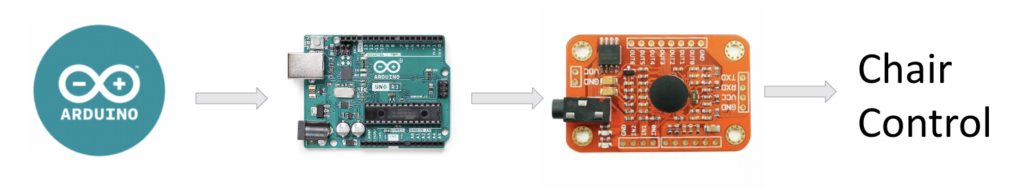

Microcontroller

For the voice recognition module, we chose the Elechouse V3, a compact voice recognition module that can be operated either through serial ports or through built-in GPIO pins. It can store up to 80 voice commands and recognizes commands by comparing them to pre-recorded samples. However, only seven of the stored commands can be loaded for recognition at a time.

The Arduino IDE is used to train the VR3 module and to control the LEDs and motors based on the recognized voice commands. To work around the seven-command limit, the program dynamically loads and clears commands within a conditional loop, as detailed in the hierarchical graph underneath.
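The load-and-clear strategy can be sketched with a small simulated recognizer. `RecognizerModel` and its methods are hypothetical stand-ins written for illustration, not the Elechouse library API; record numbers 0 to 79 are assumed to index the 80 stored commands.

```python
MAX_LOADED = 7   # recognizer slots available at once
MAX_STORED = 80  # trained records the module can store


class RecognizerModel:
    """Hypothetical stand-in for the VR3 module's load/clear behaviour."""

    def __init__(self) -> None:
        self.loaded: list[int] = []

    def clear(self) -> None:
        # Empty every recognizer slot.
        self.loaded = []

    def load(self, record_ids: list[int]) -> None:
        # Validate everything before changing state, so a failed
        # load leaves the current command set untouched.
        for rid in record_ids:
            if not 0 <= rid < MAX_STORED:
                raise ValueError(f"record {rid} outside storage range")
        if len(self.loaded) + len(record_ids) > MAX_LOADED:
            raise OverflowError(f"only {MAX_LOADED} commands fit at once")
        self.loaded.extend(record_ids)

    def swap_to(self, record_ids: list[int]) -> None:
        """Clear the current set, then load a new one (one mode change)."""
        self.clear()
        self.load(record_ids)
```

Clearing before every load is what lets a module with 80 stored commands work through a 7-slot window: each mode only ever occupies the slots it needs.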

Speech Recognition

We designed our program to follow the process described in the block diagram. To work around the voice recognition module’s limit of seven loaded commands, the program dynamically loads and clears commands according to the current mode. The program starts in “Idle” mode, in which only two of the pre-recorded commands, “Hey Chair” and “Stop”, are loaded. If “Hey Chair” is recognized, the module enters “Awake” mode, clears the loaded commands, and loads six commands: the direction commands (“Left”, “Right”, “Forward”, “Back”), a “Speed” command, and additional stop commands. If “Speed” is recognized, the module enters “Speed Selection” mode, clears the “Awake” commands, and loads “One”, “Two”, “Three”, and an additional “Stop”, allowing the user to set the wheelchair’s speed.
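The per-mode command groups described above can be written as a lookup table and checked against the seven-command limit. The word lists come from the text; the `next_mode` and `reload_for` helpers, the choice to keep the current mode on “Stop”, and the return to “Awake” after a speed is chosen are assumptions made for this sketch.

```python
from typing import Optional

LIMIT = 7  # commands the recognition module can hold at once

# Command group loaded in each mode, as listed in the description.
MODE_COMMANDS = {
    "Idle": ["Hey Chair", "Stop"],
    "Awake": ["Left", "Right", "Forward", "Back", "Speed", "Stop"],
    "Speed Selection": ["One", "Two", "Three", "Stop"],
}

# Every group must fit in the recognizer at once.
for mode_name, words in MODE_COMMANDS.items():
    assert len(words) <= LIMIT, f"{mode_name} group exceeds {LIMIT} commands"


def next_mode(mode: str, word: str) -> str:
    """Return the mode to enter after `word` is recognized in `mode`."""
    if word not in MODE_COMMANDS[mode]:
        return mode  # not loaded in this mode, so it cannot trigger
    if mode == "Idle" and word == "Hey Chair":
        return "Awake"
    if mode == "Awake" and word == "Speed":
        return "Speed Selection"
    if mode == "Speed Selection" and word in ("One", "Two", "Three"):
        return "Awake"  # assumption: back to Awake once a speed is chosen
    return mode  # directions and "Stop" keep the current mode (assumption)


def reload_for(old_mode: str, new_mode: str) -> Optional[list[str]]:
    """Commands to load after clearing, or None if no reload is needed."""
    if old_mode == new_mode:
        return None
    return list(MODE_COMMANDS[new_mode])
```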

A timer also runs alongside the speech recognition process. If the module has not recognized any command within 30 seconds, it resets to “Idle” mode, and the user must say “Hey Chair” to wake it again.

The resulting program combines mode-specific command sets to control the wheelchair with fewer than seven commands loaded at a time, and it includes an emergency stop function for safety.

Safety Features

Fail-safe: Both mechanical and digital fail-safes were implemented to ensure that the joystick returns to its neutral (stop) position if the device fails.
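One common way to structure a digital fail-safe like this is a software watchdog that drives the joystick to neutral whenever the control loop stops checking in. The sketch below is hypothetical: the source does not describe WheelTalk’s actual mechanism, and `JoystickWatchdog` and its 0.5 s deadline are illustrative choices.

```python
NEUTRAL = (0.0, 0.0)  # joystick centred: the chair's stop position


class JoystickWatchdog:
    """Hypothetical software watchdog: forces the joystick back to neutral
    if the control loop stops checking in within `deadline_s` seconds."""

    def __init__(self, deadline_s: float = 0.5) -> None:
        self.deadline_s = deadline_s
        self.last_kick = 0.0
        self.target = NEUTRAL

    def command(self, target: tuple[float, float], now: float) -> None:
        # A fresh command also counts as a heartbeat from the control loop.
        self.target = target
        self.last_kick = now

    def kick(self, now: float) -> None:
        # Heartbeat with no change of target.
        self.last_kick = now

    def output(self, now: float) -> tuple[float, float]:
        # Past the deadline, discard the stored target and go to neutral.
        if now - self.last_kick > self.deadline_s:
            self.target = NEUTRAL
        return self.target
```

Because the watchdog resets the target itself, a stalled loop cannot leave the joystick pushed in one direction.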

Input validation: The voice recognition module is speaker-dependent, so only the device’s intended user can issue voice commands. Other voices will not be recognized or responded to.

Stop Command: The stop command is the only command that does not require a wake word. In an emergency, time is paramount, and requiring a wake word before stopping the device could have fatal consequences. By simply saying “stop,” the user can halt the wheelchair immediately.


Contributions

Jay Peskin: Design and 3D printing of the joystick controller and the enclosure; selection and attachment of the microphone.

Tianyu Qi: Co-design of the joystick controller and enclosure, design of the PCB, and mounting.

Paula Sedlacek and Qixin Deng: Design and software implementation of speech recognition and wheelchair control.

Acknowledgment

We would like to thank our peers, advisors, and professors affiliated with the Audio & Music Engineering Program and the Department of Electrical & Computer Engineering at the University of Rochester for their support and guidance. Special thanks go to our senior design mentors, Michael Heilemann, Tre DiPassio, Dan Phinney, and Sarah Smith, whose invaluable advice and generous sharing of their experience have greatly contributed to our project. We would also like to thank AME alumni Daniel Kannen and Nick Bruno for their advice, and Paul Osborne of the Hopeman Shop for helping us with our mount and enclosures.