Research Experience for Undergraduates: Computational Methods for Understanding Music, Media, and Minds

How can a computer be trained to discover motifs in polyphonic music? What are the neural encodings of musical features when humans listen to music and associate it with memories? Can we leverage recent advances in Artificial Intelligence (AI) and Large Language Models (LLMs) to make communication easier between deaf children and their hearing parents? These are some of the questions that define the intellectual focus of our National Science Foundation Research Experience for Undergraduates (NSF REU) program.

Over ten weeks, students will work on cutting-edge interdisciplinary research at the intersection of artificial intelligence, neuroscience, music, media, and public health. Students receive funding from the National Science Foundation and are mentored by faculty from computer science, electrical and computer engineering, brain and cognitive sciences, music theory, neuroscience, and public health.

Summer 2026

The REU session in 2026 will run from May 26 to August 1.

How to Apply/Eligibility

You are eligible to apply if:

- You are a 1st, 2nd, or 3rd year full-time undergraduate student at a college or university.

- You are a U.S. citizen or hold a green card as a permanent resident.

- You will have completed two computer science courses or have equivalent programming experience by the start of the summer program.

We are unable to support international students through this federally funded NSF REU program. If you are looking for self-funded research opportunities, you can reach out to one of our affiliated faculty members directly to discuss your research interests.

You do not need to be a computer science major or have prior research experience. We seek to recruit a diverse group of students with varying backgrounds and levels of experience. We encourage applications from students attending non-research institutions and from students from communities underrepresented in computer science.

Before starting the application, you should prepare:

- An unofficial college transcript (a list of your college courses and grades), as a PDF file. Please include the courses you are currently taking.

- Your CV or resume, as a PDF file.

- A personal statement (up to 5000 characters), explaining why you wish to participate in this REU, including how it engages your interests, how the experience would support or help you define your career goals, and special skills and interests you would bring to the program.

- The name and email address of a teacher or supervisor who can recommend you for the REU.

How to Apply:

- Create an NSF ETAP account and fill out all portions of the registration.

- Select our REU program and follow the prompts to apply, using the materials listed above.

- Apply online no later than January 29, 2026.

- Notification of acceptance will be communicated between March 2 and April 9, 2026.

The REU Experience

Students accepted into the REU will receive:

- On-campus housing

- Meal stipend

- A stipend of $7000

- Up to $600 to help pay for travel to and from Rochester

Your experience will include:

- A programming bootcamp to help you learn or strengthen your Python programming skills.

- Performing research with a team of students and faculty on one of the REU projects.

- Professional development activities, including graduate school preparation and career planning.

- Social events and experiences, including opportunities to meet and network with students from other REU programs and members of the University community.

The David T. Kearns Center coordinates summer logistics and activities for all REU programs across the University of Rochester campus, including travel, housing, the REU orientation and the undergraduate research symposium.

Visit our REU summer activities page for more detailed information on programs and events.

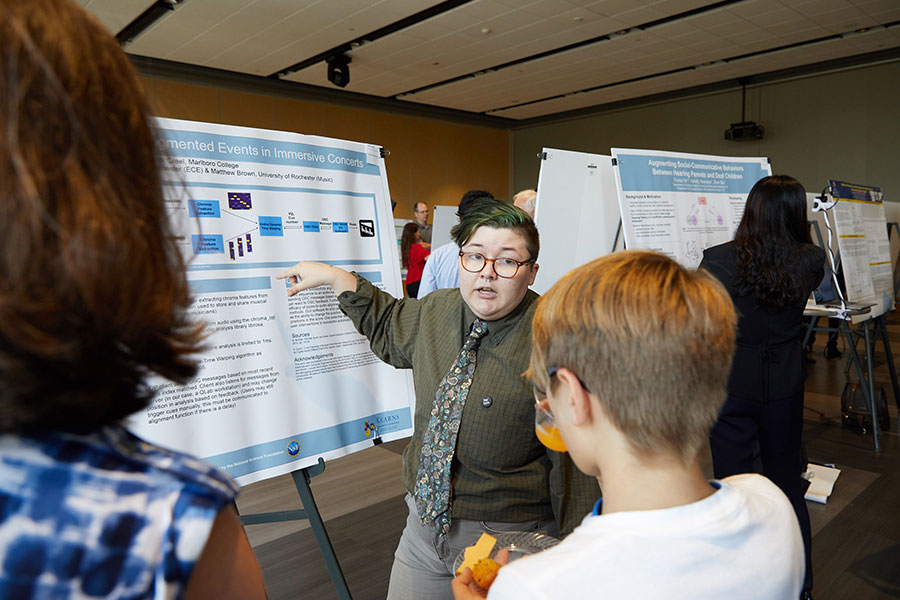

Projects, Participants, and Presentations

Summer 2026 REU Projects

Project #1

Title: Machine Learning for Analyzing Brain Signals Related to Music

Mentors: Mujdat Cetin (ECE, CS, & GIDS), Zhiyao Duan (ECE, CS, & GIDS), Ajay Anand (GIDS & BME)

Abstract: Human brain activity recorded through electroencephalography (EEG) can be used as the basis for Brain-Computer Interfaces (BCIs). Over the last two decades, major advances have been achieved in analyzing brain responses to visual stimuli and to imagined motor movements. Recent focus has shifted toward analyzing the cognitive and emotional states of healthy individuals. In this context, this REU project involves automatic analysis of human brain patterns as a person listens to or imagines a piece of music. REU students will examine connections between music and the mind by measuring brain signals during music listening and imagination, and by developing machine learning-based methods for automatically analyzing such signals. One component of the project involves analyzing the brain's responses to various aspects of the music being played, including melody, rhythm, and timbre. Another component involves analyzing brain signal patterns generated during music imagination or mental playing. The project brings together the three mentors' complementary expertise in brain-computer interfaces (Cetin), music information retrieval (Duan), and biomedical instrumentation and time-series analysis (Anand), with novel computational methods, including machine learning, as the overarching connection.

Project #2

Title: Marketing, Advertisement, and Users’ Perceptions of IQOS on Social Media

Mentors: Dongmei Li (Clinical and Translational Research & GIDS), Hangfeng He (GIDS & CS), and Zidian Xie (Clinical and Translational Research & GIDS)

Abstract: IQOS is a new heated tobacco product from Philip Morris International (PMI) that is highly popular in Japan and South Korea. In July 2020, the US FDA authorized the marketing of IQOS as a “reduced exposure” tobacco product. PMI now plans to manufacture IQOS products within the US. Businesses commonly use social media platforms such as Twitter (now X) and TikTok to promote IQOS, and users share their opinions and perceptions on the same platforms. This project aims to examine the strategies and techniques used in IQOS marketing and advertisements, as well as public perceptions and discussions of IQOS, by mining Twitter data (already archived) and analyzing TikTok videos (API access approved for research purposes) using natural language processing, large language models with video and audio understanding (e.g., Video-LLaMA), and statistical methods. REU students will use sentiment analysis, topic modeling, time series analysis, and multi-modal learning to explore social media users’ perceptions and discussions of IQOS in Japan and South Korea before and after the FDA authorization. Results from the proposed project can inform the FDA about the marketing influences and public perceptions of IQOS for future regulation of such products in the US.

Project #3

Title: Music Motif Discovery with Self-Supervised Learning

Mentors: Zhiyao Duan (ECE, CS & GIDS), David Temperley (Music Theory and Cognition), and Matthew Brown (Music Theory)

Abstract: Motifs are short fragments of music that appear repeatedly, with variations, throughout a piece. They carry special importance in a composition and are often characteristic of it. This project aims to identify motifs automatically through self-supervised learning, without requiring human-labeled motifs as training data. The idea is to train a neural network that recognizes musical fragments and their variations as similar, where the variations are generated artificially (i.e., data augmentation) through musically meaningful transformations such as pitch shifting, time stretching, modulation, inversion, and rhythmic perturbation. Once trained, the network will be able to evaluate the similarity between any fragments of a music piece and identify those that appear repeatedly as motifs. REU students will contribute to 1) designing musically meaningful variations of musical fragments to generate the self-supervised learning dataset, 2) developing and training a neural network that evaluates the similarity between musical fragments, and 3) implementing an inference algorithm to identify motifs. The mentoring team provides complementary expertise in music information retrieval (Duan) and music theory and cognition (Temperley and Brown).
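To make the data-augmentation idea more concrete, here is a minimal Python sketch of a few of the transformations named above (pitch shifting, time stretching, inversion, and rhythmic perturbation), applied to a symbolic fragment. It assumes a hypothetical representation in which a fragment is an onset-ordered list of notes with a MIDI pitch, an onset, and a duration in beats; the function names and parameter ranges are illustrative only, not the project's code, and modulation is omitted. `make_positive_pair` returns an original fragment together with a transformed variation that a similarity network would be trained to treat as "the same" motif.

```python
import random
from typing import List, NamedTuple, Tuple


class Note(NamedTuple):
    pitch: int       # MIDI pitch number (60 = middle C)
    onset: float     # start time in beats
    duration: float  # length in beats


# Hypothetical representation: a fragment is an onset-ordered list of notes.
Fragment = List[Note]


def pitch_shift(fragment: Fragment, semitones: int) -> Fragment:
    """Transpose every note by the same number of semitones."""
    return [n._replace(pitch=n.pitch + semitones) for n in fragment]


def time_stretch(fragment: Fragment, factor: float) -> Fragment:
    """Scale onsets and durations uniformly (factor > 1 slows the fragment down)."""
    return [n._replace(onset=n.onset * factor, duration=n.duration * factor)
            for n in fragment]


def invert(fragment: Fragment) -> Fragment:
    """Melodic inversion: mirror every pitch around the first note's pitch."""
    axis = fragment[0].pitch
    return [n._replace(pitch=2 * axis - n.pitch) for n in fragment]


def perturb_rhythm(fragment: Fragment, max_shift: float,
                   rng: random.Random) -> Fragment:
    """Jitter each onset by up to +/- max_shift beats.

    Onsets are clamped so they never go below zero or before the previous
    note, which keeps the fragment's note order intact.
    """
    out: Fragment = []
    prev_onset = 0.0
    for n in fragment:
        jittered = n.onset + rng.uniform(-max_shift, max_shift)
        onset = max(prev_onset, jittered)
        out.append(n._replace(onset=onset))
        prev_onset = onset
    return out


def make_positive_pair(fragment: Fragment,
                       rng: random.Random) -> Tuple[Fragment, Fragment]:
    """Return (anchor, variation) for self-supervised (contrastive) training.

    The transformation ranges below are arbitrary illustrative choices.
    """
    variation = pitch_shift(fragment, rng.randint(-5, 5))
    variation = time_stretch(variation, rng.choice([0.5, 1.0, 2.0]))
    if rng.random() < 0.5:
        variation = invert(variation)
    variation = perturb_rhythm(variation, max_shift=0.1, rng=rng)
    return fragment, variation


if __name__ == "__main__":
    motif = [Note(60, 0.0, 1.0), Note(62, 1.0, 1.0), Note(64, 2.0, 2.0)]
    anchor, variation = make_positive_pair(motif, random.Random(0))
    print(anchor)
    print(variation)
```

Because the variations are generated programmatically, any number of training pairs can be drawn from unlabeled scores, which is what removes the need for human-labeled motifs in this kind of setup.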

Project #4

Title: Assistive Technology in Supporting Face-to-Face Communication

Mentor: Zhen Bai (CS)

Face-to-face communication is central to human life, and the surge of technical innovation has not replaced it. Language barriers and social difficulties, however, often create challenges in everyday communication and collaboration. These challenges affect people who are Deaf or Hard of Hearing, autistic people, and members of heterogeneous teams whose members differ in gender and ethnicity. This project aims to design and develop innovative AI-mediated multimodal communication technologies that support efficient communication for all individuals during face-to-face interaction, from parent-child interactions to small-group learning and work.

We invite REU students to participate in the aspects of designing, prototyping, and evaluating the proposed technology that match their own interests and skills. We seek self-driven and inquisitive students to join our project and will offer opportunities to gain technical and research experience in one or more of the following areas: Augmented and Virtual Reality, Human-Computer Interaction, Natural Language Processing/LLMs, Machine Vision, and Human-AI Collaboration.

Project #5

Title: Multiuser Multimodal Mixed Reality Interface Optimization and Adaptation

Mentor: Yukang Yan (Computer Science)

Mixed Reality (MR) interfaces have strong potential to support multi-user scenarios such as social events, remote collaboration, and entertainment. A core challenge is designing multimodal interfaces (visual, auditory, haptic) that adapt to users’ diverse physical environments. Displaying a fixed interface for all users can be suboptimal: for example, audio that is played identically in a quiet and in a noisy environment, or for someone listening carefully and for someone actively conversing. This project approaches the problem from an optimization perspective: it takes an understanding of user status and environmental requirements as input, computationally estimates usability, semantic consistency, and interaction experience across interface representations, and identifies the configurations that best satisfy these objectives. REU students will (1) conduct formative studies to understand user preferences in multimodal MR interaction; (2) develop optimization algorithms for generating and adapting MR interfaces; (3) design demonstrative applications; and (4) run evaluation studies and analyze results to assess effectiveness.
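The optimization framing above can be illustrated with a toy exhaustive search. This minimal Python sketch assumes a small discrete set of candidate configurations (an output modality plus an intensity) and scores each one with a weighted sum of usability, semantic consistency, and a disruption penalty standing in for interaction experience. The `Context` and `Config` types, the scoring functions, and every number in them are hypothetical placeholders chosen for illustration; a real system would estimate these quantities from user studies or learned models and might use a different optimizer entirely.

```python
from dataclasses import dataclass
from itertools import product
from typing import Sequence


@dataclass(frozen=True)
class Context:
    noise_level: float  # 0.0 (quiet) to 1.0 (very noisy); hypothetical sensor estimate
    conversing: bool    # True if the user is actively talking with someone


@dataclass(frozen=True)
class Config:
    modality: str       # "audio", "visual", or "haptic"
    intensity: float    # 0.0 to 1.0: volume, brightness, or vibration strength


# Hypothetical semantic-consistency scores for delivering *spoken* content:
# audio preserves it best, visual (captions) reasonably well, haptic poorly.
SEMANTIC_FIT = {"audio": 1.0, "visual": 0.7, "haptic": 0.1}

# Hypothetical relative disruptiveness of each modality.
DISRUPTION_WEIGHT = {"audio": 1.0, "visual": 0.5, "haptic": 0.3}


def usability(cfg: Config, ctx: Context) -> float:
    """Toy score: stronger cues are easier to notice; noise masks audio."""
    if cfg.modality == "audio":
        return cfg.intensity * (1.0 - ctx.noise_level)
    return cfg.intensity


def disruption(cfg: Config, ctx: Context) -> float:
    """Toy cost: strong cues interrupt more, especially mid-conversation."""
    social = 1.0 if ctx.conversing else 0.2
    return DISRUPTION_WEIGHT[cfg.modality] * cfg.intensity * social


def objective(cfg: Config, ctx: Context, w_use: float = 1.0,
              w_sem: float = 1.0, w_dis: float = 1.0) -> float:
    """Weighted sum: usability + semantic consistency - disruption."""
    return (w_use * usability(cfg, ctx)
            + w_sem * SEMANTIC_FIT[cfg.modality]
            - w_dis * disruption(cfg, ctx))


def best_config(ctx: Context,
                modalities: Sequence[str] = ("audio", "visual", "haptic"),
                intensities: Sequence[float] = (0.5, 1.0)) -> Config:
    """Exhaustively score every (modality, intensity) pair and return the best."""
    candidates = [Config(m, i) for m, i in product(modalities, intensities)]
    return max(candidates, key=lambda cfg: objective(cfg, ctx))


if __name__ == "__main__":
    print(best_config(Context(noise_level=0.0, conversing=False)))
    print(best_config(Context(noise_level=0.9, conversing=True)))
```

With these toy numbers, the quiet, non-conversing context selects `Config(modality='audio', intensity=1.0)`, while the noisy, conversing context selects `Config(modality='visual', intensity=1.0)`, i.e., spoken content is switched to a visual presentation such as captions. Exhaustive search is only practical for tiny configuration spaces; larger interface spaces would call for a proper optimization algorithm.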

Visit our past REU sessions page for more information on previous projects, participants, and presentations.

Contact

Email gids-reu@ur.rochester.edu for more information.

PI

Ajay Anand, PhD

Deputy Director

Professor

Goergen Institute of Data Science

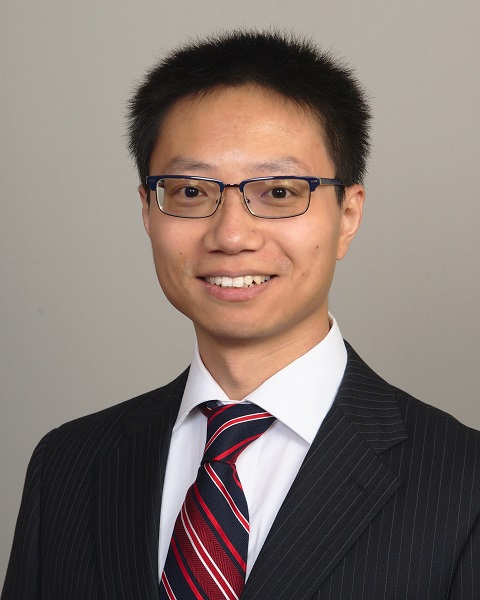

Co-PI

Zhiyao Duan

Professor

Department of Electrical and Computer Engineering

Department of Computer Science