Research Opportunities

As part of a top-tier research institution, the University of Rochester electrical and computer engineering program is committed to giving undergraduates the resources they need to take part in research.

Getting Started

The first step toward working on a research project is getting to know the kind of research done within the department. Talk with faculty and senior students, and visit the research and faculty pages to see what research is being done and by whom.

Student Research Resources

The University of Rochester provides significant assistance to undergraduate students to enable their engagement in research. Please visit the Office of Undergraduate Research to learn more about the opportunities and funding available.

Another resource of interest is the Journal of Undergraduate Research (JUR).

Recent Research Projects

Ultrasound Imaging—Vakhtang (Vato) Chulukhadze '22 and Mathias Hansen '22

Vakhtang Chulukhadze '22 and Mathias Hansen '22

Supervised by Marvin Doyley

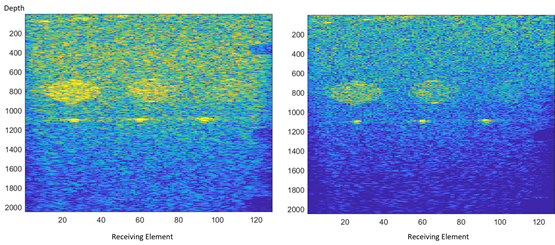

In medical ultrasound, arrays of piezoelectric transducers are used to image biological tissue. Beamforming algorithms are applied to the echo signals reflected back to the transducers from internal tissue structures to form ultrasound images. Currently, the most popular algorithm is delay-and-sum (DAS). DAS is computationally efficient and easy to implement in hardware; however, the resulting images often contain sidelobes and other artifacts that degrade image quality. Adaptive beamforming methods provide higher-quality images at a higher computational cost.
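
As a rough illustration of the delay-and-sum idea described above, the following is a minimal sketch in plain Python, not the students' implementation. It assumes integer sample delays that are already known; the function name `delay_and_sum` and the toy three-element data are hypothetical. A real system derives the delays from the array geometry and the speed of sound.

```python
def delay_and_sum(channels, delays):
    """Align each channel by its sample delay, then average across channels.

    channels: list of equal-length sample lists, one per transducer element.
    delays:   non-negative integer sample delays, one per element
              (hypothetical values chosen for this toy example).
    """
    n_samples = len(channels[0])
    output = [0.0] * n_samples
    for signal, delay in zip(channels, delays):
        # Advance the channel by its delay so echoes from the focal point line up.
        for t in range(n_samples - delay):
            output[t] += signal[t + delay]
    # Average so the result stays on the same scale as a single channel.
    return [value / len(channels) for value in output]


# Toy data: one echo reaches the three elements at samples 3, 4, and 5.
channels = [
    [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0],
]

focused = delay_and_sum(channels, [0, 1, 2])    # delays matched to the echo
unfocused = delay_and_sum(channels, [0, 0, 0])  # no alignment

print(focused)
print(unfocused)
```

With matched delays the three echoes add coherently into a single peak; without alignment the same energy is smeared across three samples at one third of the amplitude.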

Throughout the summer of 2021, Vakhtang (Vato) Chulukhadze and Mathias Hansen worked with Professor Marvin Doyley in the Parametric Imaging Research Laboratory to develop a computationally efficient adaptive beamforming method, specifically the minimum variance distortionless response (MVDR) beamformer. Vato and Mathias demonstrated that MVDR noticeably reduced sidelobes. To improve computational speed, they implemented the algorithm on a graphics processing unit (GPU) using Nvidia’s CUDA parallel computing platform.
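
For readers unfamiliar with MVDR, its textbook form can be written as a constrained minimization. This is the standard formulation, not necessarily the variant used in the project, and the symbols are assumptions introduced here: $\mathbf{R}$ is the covariance matrix of the delay-aligned channel data, $\mathbf{a}$ is the steering vector (a vector of ones once the channels are aligned), and $N$ is the number of elements.

```latex
\mathbf{w}_{\mathrm{MVDR}}
  = \arg\min_{\mathbf{w}} \; \mathbf{w}^{H}\mathbf{R}\,\mathbf{w}
  \quad \text{subject to} \quad \mathbf{w}^{H}\mathbf{a} = 1,
\qquad
\mathbf{w}_{\mathrm{MVDR}}
  = \frac{\mathbf{R}^{-1}\mathbf{a}}{\mathbf{a}^{H}\mathbf{R}^{-1}\mathbf{a}},
\qquad
\mathbf{w}_{\mathrm{DAS}} = \frac{\mathbf{a}}{N}.
```

DAS uses the same fixed weights for every pixel, whereas MVDR recomputes them from the data, which requires estimating and inverting $\mathbf{R}$ and accounts for the higher computational cost.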

The students were able to deliver high-quality images of an object in real time. The accompanying figure shows the difference between images created using the DAS algorithm (left) and the MVDR algorithm (right).

Robotics and artificial intelligence—Harel Biggie '18

Harel Biggie, ECE '18

Harel Biggie was an undergraduate research assistant in Professor Thomas Howard’s Robotics and Artificial Intelligence Laboratory from 2016 through 2018.

Harel’s research focused on adaptive representations for unmanned ground vehicle decision making, in support of bidirectional communication for human-robot teaming. His work in the laboratory contributed to three peer-reviewed papers at international robotics conferences. His first paper, which he co-authored with PhD students Jacob Arkin and Michael Napoli, Professor Matthew Walter from the Toyota Technological Institute at Chicago, and Dr. Adrian Boteanu and Professor Hadas Kress-Gazit from Cornell University, involved interpreting monologues of instructions for autonomous robots. Harel led the physical experiments, in which one of the laboratory’s Clearpath Robotics Husky A200 unmanned ground vehicles navigated from sequences of language interactions.

Harel completed his bachelor of science in electrical and computer engineering in 2018 and is now a PhD student in robotics at the University of Colorado at Boulder.

Robotics and artificial intelligence—Ethan Fahnestock '21

Ethan Fahnestock, IDE '21

Ethan Fahnestock was an undergraduate research assistant in Professor Thomas Howard's Robotics and Artificial Intelligence Laboratory from 2018 to 2021.

Ethan's work in the laboratory spanned two research projects. The first involved developing novel approaches that enable robots to understand language instructions in partially observed environments. This work, which he performed in collaboration with PhD student Siddharth Patki and Professor Matthew Walter from the Toyota Technological Institute at Chicago, led to one peer-reviewed conference paper at the Conference on Robot Learning and one peer-reviewed symposium paper at the AAAI Fall Symposium Series on Artificial Intelligence for Human-Robot Interaction. Ethan was the lead author of the latter paper and presented his work at the meeting in Washington, D.C., in November 2019.

The second project involved developing novel motion planning algorithms for unmanned ground vehicles. This work, which he performed in collaboration with undergraduate research assistant Benned Hedegaard and PhD students Jacob Arkin and Ashwin Menon, led to a peer-reviewed conference paper, co-authored by Ethan, at the IEEE/RSJ International Conference on Intelligent Robots and Systems. The paper presents a novel approach for efficient adaptation in recombinant motion planning search spaces.

Ethan completed bachelor of science degrees in physics and astronomy and in interdepartmental engineering (on the topic of robotics) in spring 2021. He is now a member of the MIT-WHOI joint program, pursuing a PhD in electrical engineering and computer science and in applied ocean science and engineering.

Vibro-acoustic interfaces—Seth Roberts and Geoffrey Kulp '23

Seth Roberts, AME '23, and Geoffrey Kulp, AME '23, supervised by Professor Michael Heilemann

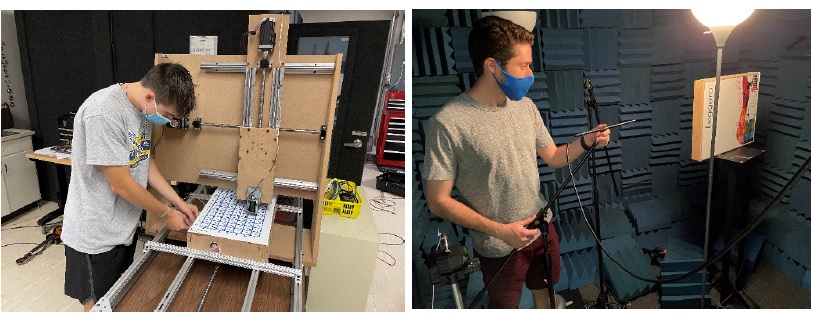

Over the course of the last decade, smart devices have become essential components of daily life. However, apart from conversational assistants, most smart devices and environments rely primarily on presenting information visually, a poor fit for many situations where users’ visual attention is on something besides the device. One way to support these situations is to let people use sound or touch for interactions. However, adding separate microphones, loudspeakers, and vibration mechanisms using conventional means can impose extra costs and reduce the device’s durability and aesthetics.

This project seeks to develop alternative technologies for recording and reproducing spatial audio through bending vibrations on flat surfaces ranging from smartphones to video walls. These vibrations can also allow the smart acoustic surface to serve as a touch interface. The advantage of this approach lies in the fact that the surface is already part of the device, allowing the device to maintain its durability and aesthetics while incorporating these new features. Adding spatial audio and haptic feedback to OLED displays, smartphones, and video walls will provide fundamental technology to improve people’s ability to navigate complex data sets such as menus and maps, and enable a greater sense of immersion for remote applications such as video conference calls.

Juniors Seth Roberts and Geoffrey Kulp have helped design prototype panels to demonstrate the effectiveness of these new vibro-acoustic interfaces. This work is supported by NSF Grant #2104758.

Robot motion planning for unmanned ground vehicles—Benned Hedegaard '22

Benned Hedegaard, CSC '22, supervised by Professor Thomas Howard

Benned Hedegaard is an undergraduate research assistant in Professor Thomas Howard's Robotics and Artificial Intelligence Laboratory and a double major in computer science and linguistics.

Benned joined the laboratory in spring 2020 on a project involving robot motion planning for unmanned ground vehicles as part of the Army Research Laboratory’s Scalable, Adaptive, and Resilient Autonomy program. This research, which he performed in collaboration with Ethan Fahnestock, Jacob Arkin, and Ashwin Menon, led to a peer-reviewed conference paper titled “Discrete optimization of adaptive state lattices for iterative motion planning on unmanned ground vehicles.” Benned was first author of the paper and presented it at the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems.

Most recently, Benned joined a separate project supported by the Army Research Laboratory’s Distributed and Collaborative Intelligent Systems and Technology Collaborative Research Alliance on developing new approaches for grounded language communication in human-robot teams.

Benned is also a member of the Robotics Club Lunabotics Team, which aims to develop a semi-autonomous robotic excavator for the 2022 NASA Lunabotics competition.